AI: Between Hype and Caution

How do we make sense of AI's conflicting narratives, and how do we take control of them?

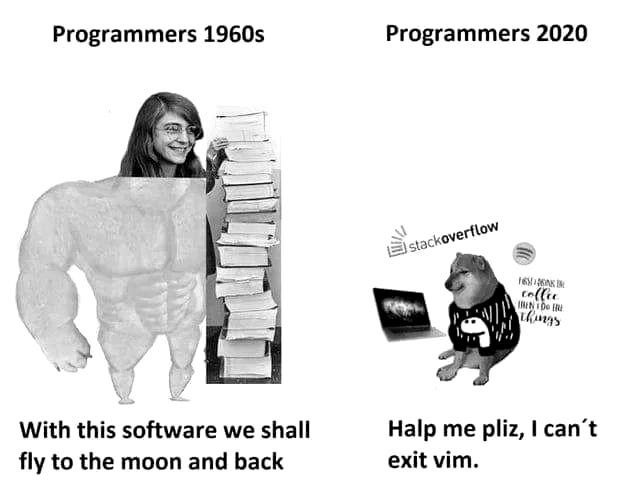

It is hard to keep up with all the perspectives on AI these days. Combing through online content, you'll easily find contradictory views of what an AI-infused society will look like, often coming from two equally decorated AI "veterans" who have spent 30 years in the trenches and (probably) coded the first neural networks on the original Turing Machine.

One we'll call the AI "pessimist," the cynical skeptic who sees AI as overhyped, underwhelming, and even a moral and ethical risk to society. The other we'll call the AI "optimist," the bright-eyed believer who sees great potential in everything AI touches, solving issues from cancer to climate change in one fell swoop. These wizened tech wizards are engaged in a duel of contradictory prophecies about our impending AI-pocalypse or utopia. You'd be forgiven for finding this confusing or wanting to tune it out. I sure do. But perhaps there is a way to make sense of it and duel our way to an actually kind-of-extraordinary AI future.

I'm no AI expert; I'm just an enthusiast hoping to write my way to insight. But I grew up in the 1990s. I've already witnessed one massive digital transformation stomping through society like a bull in a china shop: the rise of Web 2.0. It may give you either great panic or relief to realize that very few of the thorny issues we face today as consequences of this online revolution were widely anticipated or talked about. Take the effect of social media on mental health. Who would have thought that a silly application called Instagram, where you upload selfies, would turn into a major contributor to young people's diminishing self-esteem and body acceptance? Yet this issue has become so prominent and urgent today that even the US Congress is actually getting off its polarizing scream-o-coaster to hold someone accountable (probably Instagram's parent, Meta, if the Universe Bends Towards Justice).

I see similar forces and debates playing out with AI, particularly generative AI, and I think this point in the rising tide is a good moment for some reflection and understanding. We need to get our bearings before we can tackle this behemoth. So, let's explore some different perspectives on AI's role in society, highlighting in broad strokes what they bring to the table, how they influence our view of AI today, and what lies ahead.

Two Camps, Two Visions

With any disruptive technology, there are always two extremes: the "go-fast-and-break-things" yuppies who want to mainline it directly into society's artery and the "this-will-destroy-life-as-we-know-it" Luddites who will spare no words telling you how horrible and dangerous it is. The tug-of-war over AI between these optimists and pessimists is happening right now in our governments, communities, industries, and public forums.

What are the pessimists about? Some of the most prominent narratives from this camp revolve around automation (that AI will replace human jobs) and supremacy (that AI will become more powerful than humans). These concerns, while not unreasonable, are more likely to matter in the long term. There are also more nuanced criticisms that stem from careful consideration of AI's potential impacts in the near future, including automated decision-making systems affecting civil liberties, ethical dilemmas with autonomous weapons, socio-economic inequality, and privacy issues. The pessimists argue that AI's promise often doesn't align with its practical capabilities. For example, generative AI systems, like large language models, can produce coherent text, images, or even deepfakes, but they frequently lack true understanding. To critics, this superficiality poses dangers, whether it's misinformation, bias, or even the erosion of human autonomy. In their view, AI isn't just an overblown technological gimmick but a threat that could exacerbate inequality, invade privacy, and slowly automate away human purpose. Scary stuff indeed.

On the other side, we have the optimists, those who believe AI is poised to revolutionize every aspect of our lives, from personalized education to solving complex societal issues. To them, AI holds the potential to amplify human creativity, streamline productivity, and address critical challenges in health, climate, and beyond. They are sold on the vision of AI seamlessly integrated into society, reducing friction and inefficiencies, empowering individuals, and democratizing access to knowledge and resources. For these enthusiasts, AI represents an engine of progress, one capable of bringing transformative benefits if deployed and one that we would be derelict not to see through to its full potential. Indeed, we've seen some pretty cool breakthroughs from AI, from generating realistic conversations, images, and videos with frontier models like DALL-E or Claude to advances in protein folding prediction with AlphaFold.

So which is it? Do I put on my Dr. Doom McAlarmist hat, fill my bunker with canned goods, and buy a manual on "How to Befriend our Robot Overlords"? Or do I draft a heartfelt welcome speech for when the AI gods descend from the Cloud and wait for Nirvana?

Meet You in the Middle?

When I was in my therapist's office the other week, he taught me a technique for dealing with my tendency to catastrophize. He told me, "Marc, think of the worst possible scenario first, spend a few minutes mulling that over in all its gory detail, then spend a few minutes thinking of the fuzzy best-case scenario. After that, think about what is most likely to happen. You see, the truth doesn't stick to the extremes; it's usually somewhere in the middle."

What can we infer from this nugget of wisdom besides the fact that my therapist may well be side-hustling as a philosopher? Well, perhaps it's that AI's most horrible hellfire vision and its best rose-colored vision are both unlikely to happen as people predict; more likely, we'll be somewhere in the middle, and the real issues will be entirely different from the ones we thought most likely.

Big promises, good and bad, are being made about AI right now because it's new and exciting… and we're not even close to understanding it. The most experienced AI practitioners don't know how this plays out. The folks on social media claiming that AI will "revolutionize" X, Y, and Z don't have a clue. The doomsayers who predict AI will hollow out society like a rotten stump don't know either. Like an 1850s gold rush town in California, we're all just speculating.

Both visions will most likely prove partly true and partly false. The key is to listen carefully to each so that we can push ourselves towards the best of both. Avoid getting captured by one extreme so you don't constrain what AI could be, which is, just maybe, a genuinely beneficial technology that delivers without fatal side effects. We can wait in the middle for a real chance to envision something better than anyone can currently imagine. Remember the phrase, "To a hammer, everything looks like a nail"? Yes, it's a cliché. But as David Foster Wallace said, "This, like many clichés, so lame and unexciting on the surface, actually expresses a great and terrible truth." Our beliefs about what AI is valuable for ultimately become our actual values towards the technology itself. We dictate what AI will become... if we resist the siren call of seeking certainty in what is fundamentally uncertain.

So What?

Ultimately, I think what causes people to lose faith and trust in AI, or really in anything societal, be it a political system or a new technology, is the feeling of powerlessness. Caught in a whirlwind of factors like corporate power, government control, and technological determinism, we forget that we influence how AI evolves. But right now is the time to think seriously about how we want a technology like AI to contribute to our society, and to meet the moment by shaping what AI means to us, what it does, and why.

Maybe we should spend less time arguing about the future of AI and more time arguing about the future of humans. What do we want that to look like? How do we give ourselves the power to own it?
